Automate HN 'Who is Hiring' Job Scrapes & Structure with AI

Automatically extract, clean, and structure hundreds of Hacker News job postings monthly, saving hours of manual data entry and providing a centralized, actionable database.

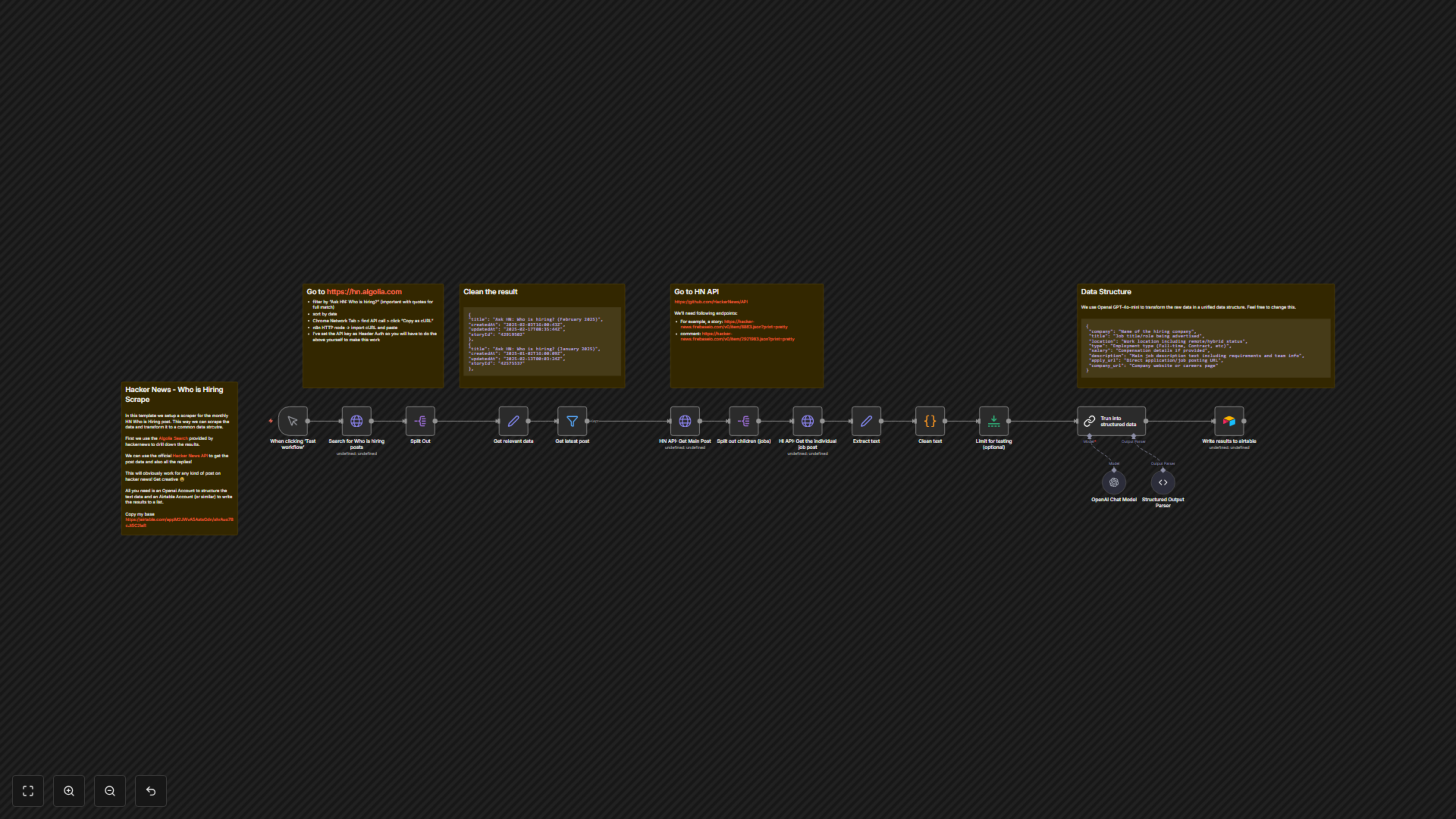

Manually sifting through Hacker News's monthly 'Who is Hiring' threads for relevant job opportunities is time-consuming, because each posting is an unstructured free-text comment. This workflow automates the extraction of job postings, uses AI to parse them into a consistent format, and saves the structured data to Airtable.

Documentation

Hacker News 'Who is Hiring' Scraper with AI

This n8n workflow automates the tedious task of monitoring and extracting job postings from Hacker News's monthly 'Ask HN: Who is hiring?' threads. Designed for job seekers, recruiters, and market researchers, it uses AI to transform unstructured comment data into a clean, organized format.

Key Features

- Automated scraping of Hacker News's 'Who is Hiring' posts for recent opportunities.

- Extracts detailed job information including company, title, location, employment type, salary, description, and application URLs.

- Leverages OpenAI's GPT-4o-mini (via LangChain) for intelligent data structuring from raw job text.

- Automatically cleans and preprocesses raw text data, removing HTML and special characters for higher accuracy.

- Stores all structured job data directly into an Airtable base for seamless filtering, analysis, and further automation.
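To make the target shape concrete, here is a minimal Python sketch of one structured job record built from the fields listed above. The class name `JobPosting`, the field names, and the helper `to_airtable_fields` are illustrative assumptions; the workflow's actual schema and Airtable column names may differ.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class JobPosting:
    """Hypothetical record shape; field names are assumptions, not the workflow's schema."""
    company: str
    title: str
    location: Optional[str] = None
    employment_type: Optional[str] = None
    salary: Optional[str] = None
    description: Optional[str] = None
    apply_url: Optional[str] = None


def to_airtable_fields(job: JobPosting) -> dict:
    """Return only the populated fields, so missing values are omitted instead of sent as nulls."""
    return {name: value for name, value in asdict(job).items() if value is not None}


job = JobPosting(company="Acme", title="Engineer", location="Remote")
fields = to_airtable_fields(job)
```

Many postings omit salary or employment type, so keeping those fields optional is likely a safer default than requiring the model to fill every one.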

How It Works

1. Trigger & Initial Fetch: The workflow starts manually, searching Algolia's Hacker News index for recent 'Ask HN: Who is hiring?' posts.

2. Post Filtering: It identifies and filters for the latest monthly 'Who is Hiring' posts, ensuring you only process relevant and recent opportunities.

3. Detailed Post Retrieval: Using the official Hacker News API, the workflow fetches the complete content of the main 'Who is Hiring' posts and all associated child comments, which typically contain individual job descriptions.

4. Text Extraction & Cleaning: Job descriptions are extracted from the raw comments, then rigorously cleaned of HTML tags, special characters, and formatting inconsistencies using a custom code node.

5. AI-Powered Structuring: The cleaned job text is sent to an OpenAI Chat Model (GPT-4o-mini), guided by a predefined schema, to intelligently extract and structure key job details such as company name, job title, location, employment type, salary information, and application URLs.

6. Data Storage: Finally, the consistently structured job data is written to an Airtable base, creating a searchable and analyzable database of job opportunities.
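The fetch and cleaning steps above can be sketched in Python as follows. This is not the workflow's own code node: the helper names are hypothetical, and the choice of Algolia's `search_by_date` endpoint and the `tags=story` filter are assumptions about how the search might be issued. The sketch only builds request URLs and cleans a comment string; it makes no network calls.

```python
import html
import re
from urllib.parse import urlencode


def algolia_search_url(query: str) -> str:
    """Build a search URL for Algolia's public Hacker News index (endpoint choice is an assumption)."""
    return "https://hn.algolia.com/api/v1/search_by_date?" + urlencode(
        {"query": query, "tags": "story"}
    )


def hn_item_url(item_id: int) -> str:
    """Build the official Hacker News API URL for a story or comment."""
    return f"https://hacker-news.firebaseio.com/v0/item/{item_id}.json"


def clean_comment_text(raw: str) -> str:
    """Turn the HTML of a Hacker News comment into plain text."""
    # Treat paragraph and line-break tags as newlines so sections stay separated.
    text = re.sub(r"(?i)<\s*(p|br)\s*/?\s*>", "\n", raw)
    # Drop every remaining tag, keeping the text it wrapped.
    text = re.sub(r"<[^>]+>", "", text)
    # Decode entities such as &#x2F; and &amp; after the tags are gone.
    text = html.unescape(text)
    # Collapse runs of spaces and tabs, trim each line, and drop empty lines.
    lines = [re.sub(r"[ \t]+", " ", line).strip() for line in text.split("\n")]
    return "\n".join(line for line in lines if line)


raw_comment = (
    "Acme | Engineer | Remote<p>Apply:  "
    '<a href="https:&#x2F;&#x2F;acme.example&#x2F;jobs" rel="nofollow">'
    "https:&#x2F;&#x2F;acme.example&#x2F;jobs</a>"
)
cleaned = clean_comment_text(raw_comment)
```

Entities are decoded only after tags are stripped, so an escaped `&lt;` in a posting survives as literal text instead of being mistaken for markup.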