Get Instant AI Answers with a Conversational Research Agent

Deliver accurate, context-aware answers quickly and cut the time spent on manual research.

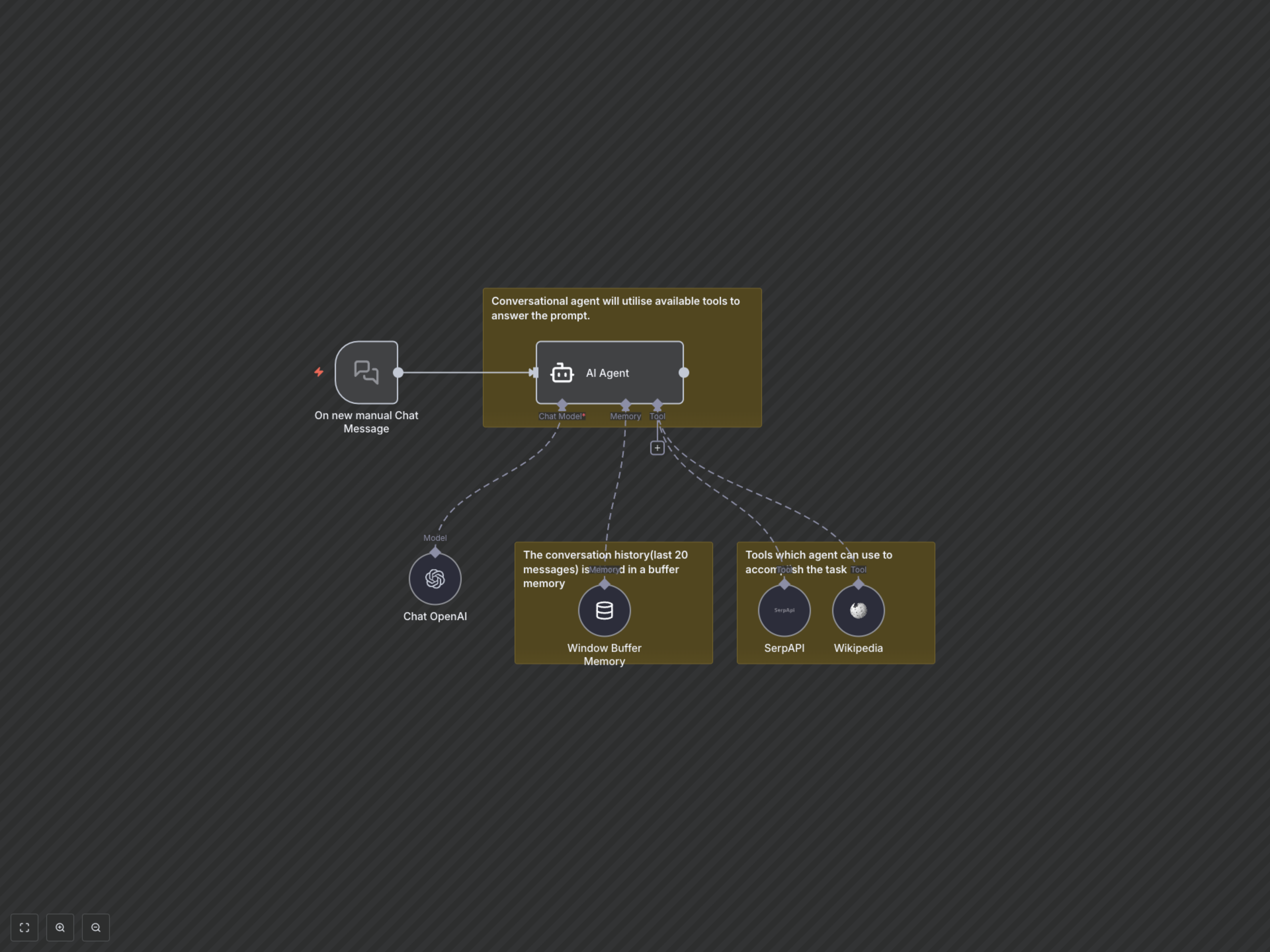

Searching for information by hand while keeping track of an ongoing conversation is slow. This workflow builds an AI agent that answers questions in context and looks up information on the web and on Wikipedia when a question needs it.

Documentation

AI-Powered Conversational Research Agent

This n8n workflow builds a conversational AI agent that interprets user queries, remembers recent conversation context, and runs real-time research with external tools. It suits support desks, information retrieval, and interactive knowledge bases, where users need quick answers without looking them up manually.

Key Features

- Contextual Conversations: The agent keeps the last 20 messages in a window buffer memory, so follow-up questions are interpreted in the context of the ongoing conversation.

- Intelligent Information Retrieval: When a question needs external information, the agent searches the web via SerpAPI or queries Wikipedia, giving it access to both current and encyclopedic sources.

- Powered by GPT-4o-mini: Uses OpenAI's efficient gpt-4o-mini model to interpret queries, decide when a tool is needed, and write the final response.

- Flexible Tool Integration: Add or remove LangChain-compatible tools to change what the agent can look up or do.
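The memory window and pluggable tools described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not n8n's implementation: `WindowBufferMemory` and `ToolRegistry` are hypothetical names, and the window here counts individual messages, as the feature list describes. A `deque` with `maxlen` discards the oldest entry automatically once the window is full, and keeping tools in a name-to-callable map means adding or removing one leaves the agent logic untouched.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple

Message = Tuple[str, str]  # (role, text)


class WindowBufferMemory:
    """Hypothetical stand-in for the 'Window Buffer Memory' node.

    Keeps only the most recent `window` messages; appending to a full
    deque silently drops the oldest one.
    """

    def __init__(self, window: int = 20) -> None:
        self._messages: Deque[Message] = deque(maxlen=window)

    def add(self, role: str, text: str) -> None:
        self._messages.append((role, text))

    def context(self) -> List[Message]:
        # Oldest-first copy of the messages still inside the window.
        return list(self._messages)


class ToolRegistry:
    """Hypothetical registry: tools are callables keyed by name."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[[str], str]] = {}

    def register(self, name: str, fn: Callable[[str], str]) -> None:
        self._tools[name] = fn

    def unregister(self, name: str) -> None:
        self._tools.pop(name, None)  # removing an unknown tool is a no-op

    def names(self) -> List[str]:
        return sorted(self._tools)

    def call(self, name: str, query: str) -> str:
        return self._tools[name](query)


if __name__ == "__main__":
    memory = WindowBufferMemory(window=20)
    for i in range(25):
        memory.add("user", f"message {i}")
    print(len(memory.context()))     # 20
    print(memory.context()[0])       # ('user', 'message 5')

    tools = ToolRegistry()
    tools.register("serpapi", lambda q: f"[web] {q}")
    tools.register("wikipedia", lambda q: f"[wiki] {q}")
    tools.unregister("serpapi")
    print(tools.names())             # ['wikipedia']
```

After 25 messages only the newest 20 remain, so the oldest surviving entry is message 5.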

How It Works

When a message arrives through the 'On new manual Chat Message' trigger, the workflow passes it to the 'AI Agent' node. The agent first loads the last 20 messages from the 'Window Buffer Memory' node so the new query is read in context. It then sends the query and that context to the 'Chat OpenAI' node (configured with gpt-4o-mini). If the model determines that external information is needed, the agent calls either the 'SerpAPI' tool for general web searches or the 'Wikipedia' tool for encyclopedic knowledge, and uses the retrieved results to compose its answer.
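The request flow above can be approximated with the hypothetical sketch below. It assumes stub tools (`search_web`, `search_wikipedia`) that return canned strings instead of calling SerpAPI or Wikipedia, and it replaces gpt-4o-mini's tool decision with a simple keyword rule (`stub_model_choose_tool`). It therefore shows the order of operations (load context, decide, call a tool, answer, store the turn) rather than real model behavior.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Optional, Tuple

Message = Tuple[str, str]  # (role, text)


def search_web(query: str) -> str:
    """Hypothetical stub for the 'SerpAPI' tool; makes no network call."""
    return f"web results for '{query}'"


def search_wikipedia(query: str) -> str:
    """Hypothetical stub for the 'Wikipedia' tool; makes no network call."""
    return f"encyclopedia entry for '{query}'"


TOOLS: Dict[str, Callable[[str], str]] = {
    "serpapi": search_web,
    "wikipedia": search_wikipedia,
}


def stub_model_choose_tool(query: str, history: List[Message]) -> Optional[str]:
    """Stand-in for gpt-4o-mini's tool decision, using a keyword rule.

    `history` is accepted to mirror what the model sees, but this stub ignores it.
    """
    q = query.lower()
    if any(word in q for word in ("latest", "today", "news", "price")):
        return "serpapi"      # time-sensitive question -> web search
    if q.startswith(("who was", "what is", "history of")):
        return "wikipedia"    # encyclopedic question -> Wikipedia
    return None               # answer from conversation context alone


def handle_chat_message(query: str, memory: Deque[Message]) -> str:
    history = list(memory)                          # 1. load recent context
    tool = stub_model_choose_tool(query, history)   # 2. decide whether a tool is needed
    if tool is not None:
        observation = TOOLS[tool](query)            # 3. call the chosen tool
        answer = f"Based on {tool}: {observation}"
    else:
        answer = f"Answer from context ({len(history)} prior messages)"
    memory.append(("user", query))                  # 4. record the turn in memory
    memory.append(("assistant", answer))
    return answer


if __name__ == "__main__":
    memory: Deque[Message] = deque(maxlen=20)
    print(handle_chat_message("What is LangChain?", memory))
    # Based on wikipedia: encyclopedia entry for 'What is LangChain?'
    print(handle_chat_message("latest n8n release news", memory))
    # Based on serpapi: web results for 'latest n8n release news'
    print(handle_chat_message("Thanks, can you summarise that?", memory))
    # Answer from context (4 prior messages)
```

In the actual workflow the routing decision comes from the language model reading the tool descriptions, not from fixed keywords; the stub only makes the control flow visible and testable.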