Optimize Local Ollama LLM Performance with Dynamic Routing

Automate model selection so that every prompt reaches the best-suited model in your local Ollama setup without manual intervention, for faster and more accurate AI responses.

Manually picking a local LLM for each task is slow and error-prone: the wrong model gives weaker answers and wastes compute. This workflow analyzes each user prompt and dynamically routes it to the most suitable self-hosted Ollama LLM, so every request is handled by a model suited to it and your data stays on your own machine.

Documentation

Private & Local Ollama Self-Hosted LLM Router

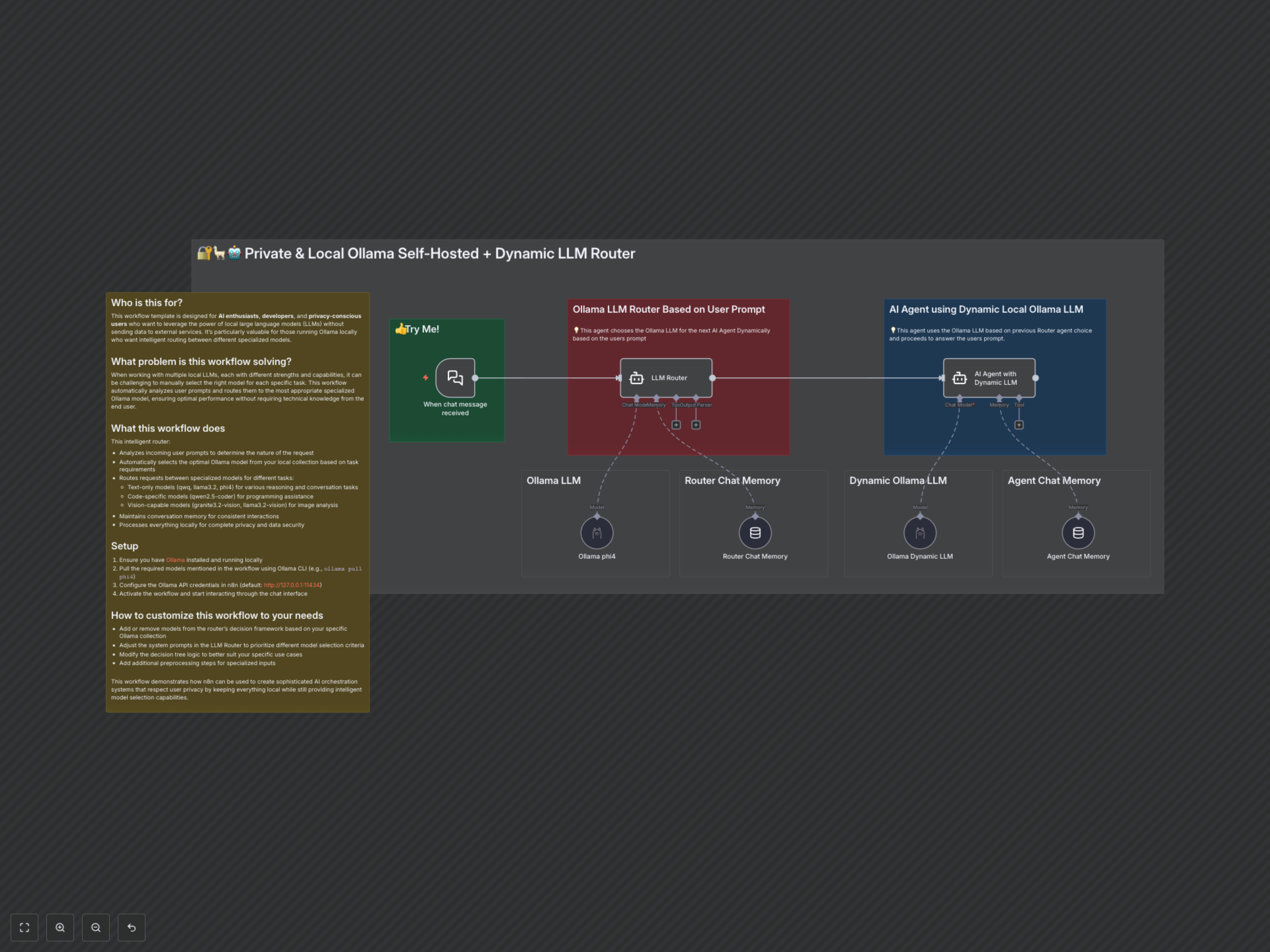

This workflow intelligently routes user prompts to the most appropriate self-hosted Ollama Large Language Model (LLM) based on task requirements, ensuring privacy and optimal performance. It's ideal for AI enthusiasts, developers, and privacy-conscious users leveraging local LLMs.

Key Features

- Automated, intelligent routing of user prompts to specialized local Ollama models.

- Supports diverse tasks including complex reasoning, multilingual conversations, coding, and vision-based analysis.

- Keeps prompts and responses private by processing all interactions locally.

- Maintains conversation context with integrated chat memory for consistent interactions.

- Customizable decision framework to adapt to your specific Ollama model collection and use cases.
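To make the customizable decision framework more concrete, here is a minimal Python sketch of how such a framework could be represented as data and turned into instructions for a router model. It is an illustration only, not the workflow's actual configuration: the model names come from the examples in this description, while the task descriptions, the fallback model, and the helper names `build_router_prompt` and `parse_router_reply` are assumptions.

```python
# Hypothetical decision framework. Model names are taken from the examples in
# this description; the task descriptions and the fallback are assumptions.
FRAMEWORK = {
    "qwq": "complex reasoning and multi-step problems",
    "qwen2.5-coder:14b": "writing, explaining, or debugging code",
    "granite3.2-vision": "analyzing charts and other images",
}
DEFAULT_MODEL = "qwq"  # assumed fallback when the router's reply is unusable


def build_router_prompt(framework: dict[str, str]) -> str:
    """Render the framework as instructions for the router model."""
    lines = [
        "Pick the single best model for the user's request.",
        "Reply with the model name only.",
        "",
        "Available models:",
    ]
    lines += [f"- {name}: {purpose}" for name, purpose in framework.items()]
    return "\n".join(lines)


def parse_router_reply(reply: str, framework: dict[str, str],
                       default: str = DEFAULT_MODEL) -> str:
    """Return a known model name from the router's reply, else the default."""
    cleaned = reply.strip().strip("'\"`").lower()
    for name in framework:
        if cleaned == name.lower():
            return name
    return default
```

Adapting the router to a different model collection then amounts to editing the `FRAMEWORK` entries; validating the reply against the known names guards against the router returning a model that is not installed.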

How It Works

Upon receiving a chat message, the workflow's intelligent LLM Router agent analyzes the user's prompt against a predefined decision framework. It dynamically selects the best-suited Ollama model from your local collection (e.g., 'qwq' for reasoning, 'qwen2.5-coder:14b' for code, 'granite3.2-vision' for chart analysis). The selected model then processes the request, maintaining chat memory for context, and delivers an optimized response, all within your local environment.
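The last two steps, passing the request to the selected model and keeping chat memory, can be sketched in Python as follows. This is a rough illustration under stated assumptions, not the workflow's implementation: it assumes Ollama's HTTP chat endpoint on its default local port, and `ChatMemory`, `build_chat_payload`, and `ask` are hypothetical names introduced here. The model name is expected to come from the router step.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


class ChatMemory:
    """Keeps only the most recent user/assistant exchanges."""

    def __init__(self, max_turns: int = 5):
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.max_messages = max_turns * 2  # one user + one assistant per turn
        self.messages: list[dict] = []

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})
        self.messages = self.messages[-self.max_messages:]


def build_chat_payload(model: str, memory: ChatMemory, prompt: str) -> dict:
    """Combine remembered messages with the new prompt for the chosen model."""
    return {
        "model": model,
        "messages": memory.messages + [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON response instead of a stream
    }


def ask(model: str, memory: ChatMemory, prompt: str) -> str:
    """Send the prompt to the local Ollama server and record the exchange."""
    payload = build_chat_payload(model, memory, prompt)
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        answer = json.loads(response.read())["message"]["content"]
    memory.add("user", prompt)
    memory.add("assistant", answer)
    return answer
```

Because the memory is shared across calls, a follow-up question can be routed to a different model and still see the earlier exchange, for example `ask("qwen2.5-coder:14b", memory, "Now add unit tests")` after a reasoning question answered by `qwq`.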